CapLoader 1.9.5 Alerts on Malicious Traffic

CapLoader 1.9.5 was released today!

The most important addition in the 1.9.5 release is the new Alerts tab, in which CapLoader warns about malicious network traffic such as command-and-control protocols. The Alerts tab also shows information about network anomalies that are often related to malicious traffic, such as periodic connections to a particular service or long-running sessions.

Other additions in this new version are:

- BPF support for “vlan” keyword, for example “vlan”, “not vlan” or “vlan 121”

- Support for nanosecond PCAP files (magic 0xa1b23c4d)

- Support for FRITZ!Box PCAP files (magic 0xa1b2cd34)

- Decapsulation of the CAPWAP protocol, so that flows inside CAPWAP can be viewed and filtered on

- Domain names extracted from TLS SNI extensions
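The magic values in the list above identify the capture file format from its first four bytes. Here is a minimal Python sketch of such a check. It is not CapLoader's code, and the `identify_pcap` function and its return format are made up for illustration. The classic microsecond magic 0xa1b2c3d4 is not mentioned above and is included only for comparison.

```python
import struct

# Magic values as seen when read in the byte order of the writing host.
PCAP_MAGICS = {
    0xA1B2C3D4: "pcap (microsecond timestamps)",  # classic format, for comparison
    0xA1B23C4D: "pcap (nanosecond timestamps)",
    0xA1B2CD34: "FRITZ!Box pcap",
}

def identify_pcap(header: bytes):
    """Return (format name, byte order) for a file header, or None if unknown.

    Hypothetical helper: only the first four bytes of the header are inspected.
    """
    if len(header) < 4:
        return None
    # The writer may have used either byte order, so try both.
    for order, fmt in (("big-endian", ">I"), ("little-endian", "<I")):
        (magic,) = struct.unpack(fmt, header[:4])
        if magic in PCAP_MAGICS:
            return PCAP_MAGICS[magic], order
    return None
```

Calling `identify_pcap(open(path, "rb").read(4))` on a capture file would then tell the three formats apart before any packets are parsed.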

Alerts for Malicious Network Traffic

As you can see in the video at the end of this blog post, the Alerts tab is a fantastic addition for everyone who wants to detect malicious activity in network traffic. Not only can it alert on over 30 different malicious command-and-control (C2) protocols (including Cerber, Gozi ISFB, IcedID, RedLine Stealer, njRAT and QakBot), it also alerts on generic behavior that is typically seen in malware traffic. Examples of such generic behavior are periodic connections to a C2 server or long-running TCP connections. This type of behavioral analysis can be used to detect C2 and backdoor traffic even when the protocol is unknown. There are also signatures that detect “normal” protocols, such as HTTP, TLS or SSH, running on non-standard ports, as well as the reverse, where a standard port like TCP 443 is carrying a protocol that isn’t TLS.
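To give a feel for what detecting periodic connections can involve, here is a minimal Python sketch that flags a series of connection start times as periodic when the intervals between them are nearly constant. This is only one simple way to approach the problem and is not CapLoader's actual algorithm. The function name `looks_periodic` and the thresholds `min_connections` and `max_cv` are assumptions chosen for illustration.

```python
from statistics import mean, pstdev

def looks_periodic(timestamps, min_connections=5, max_cv=0.1):
    """Return True if connection start times (in seconds) recur at a
    near-constant interval.

    min_connections and max_cv are illustrative thresholds only.
    """
    times = sorted(timestamps)
    if len(times) < min_connections:
        return False  # too few connections to judge
    # Time between each connection and the next one to the same service.
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    average = mean(intervals)
    if average <= 0:
        return False  # all connections started at the same instant
    # Coefficient of variation: a low value means evenly spaced connections.
    return pstdev(intervals) / average <= max_cv
```

A bot checking in roughly every 60 seconds yields a very low coefficient of variation, whereas a user browsing the web produces irregular intervals. Malware that adds jitter to its beacon interval would need a more tolerant threshold.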

Many of CapLoader’s alert signatures are modeled after threat hunting techniques, which can be used to detect malicious activities that traditional alerting mechanisms like antivirus, EDRs and IDSs might have missed. By converting the logic involved in such threat hunting tasks into signatures, a great deal of the analysts’ time can be saved. In this sense, part of CapLoader’s alerting mechanism is a form of automated threat hunting, which saves several steps in the process of finding malicious network traffic in a packet haystack.

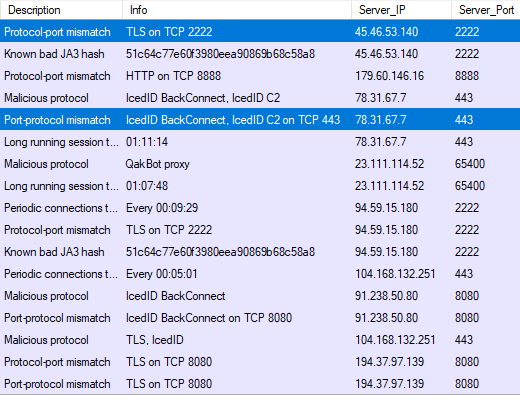

Watch my Hunting for C2 Traffic video for a demonstration of the steps required to perform manual network-based threat hunting without CapLoader’s Alerts tab. In that video I identify TLS traffic to a non-TLS port (TCP 2222) as well as non-TLS traffic to TCP port 443. As of version 1.9.5, CapLoader automatically generates alerts for that type of traffic. More specifically, the alert types will be Protocol-port mismatch (TLS on TCP 2222) and Port-protocol mismatch (non-TLS on TCP 443). Below is a screenshot of CapLoader’s new Alerts tab after having loaded the capture files analyzed in the Hunting for C2 Traffic video.

Image: Alerts for malicious traffic in CapLoader 1.9.5.
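The logic behind the two mismatch alert types can be sketched in a few lines of Python. This is a simplified illustration, not how CapLoader identifies protocols. It assumes that TLS can be recognized from a ClientHello at the start of the first client payload, and that TCP 443 is the only TLS port. The names `looks_like_tls`, `mismatch_alert` and `tls_ports` are hypothetical.

```python
def looks_like_tls(payload: bytes) -> bool:
    """Heuristic: does the first client payload start with a TLS ClientHello?"""
    return (
        len(payload) >= 6
        and payload[0] == 0x16   # record content type: handshake
        and payload[1] == 0x03   # major version 3 (SSL 3.0 / TLS 1.x)
        and payload[2] <= 0x04   # minor version 0 to 4
        and payload[5] == 0x01   # handshake type: ClientHello
    )

def mismatch_alert(server_port: int, payload: bytes, tls_ports=frozenset({443})):
    """Return an alert type name, or None when port and protocol agree.

    tls_ports is an assumption; a real deployment would list more ports.
    """
    is_tls = looks_like_tls(payload)
    if is_tls and server_port not in tls_ports:
        return "Protocol-port mismatch"   # e.g. TLS on TCP 2222
    if not is_tls and server_port in tls_ports:
        return "Port-protocol mismatch"   # e.g. non-TLS on TCP 443
    return None
```

With this sketch, a ClientHello sent to TCP 2222 gives "Protocol-port mismatch", while a plain-text payload sent to TCP 443 gives "Port-protocol mismatch".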

Video Demonstration of CapLoader's Alerts Tab

The best way to explain the power of CapLoader’s Alerts tab is probably by showing it in action. I have therefore recorded the following video demonstration.

The PCAP file analyzed in the video can be downloaded from here:

https://media.netresec.com/pcap/McDB_150724-18-22_FpF90.pcap

This capture file is a small snippet of the network traffic analyzed in one of my old network forensics classes. It contains malicious traffic from njRAT and Kovter mixed with a great deal of legitimate web traffic.

Posted by Erik Hjelmvik on Thursday, 09 February 2023 14:30:00 (UTC/GMT)

Tags: #CapLoader #Video #njRAT #Threat Hunting
